Load balancing with Consul & Nginx

Over the last few weeks I have been playing with Docker, Consul and other tools, and I tried load-balancing multiple Docker containers running an API. I decided to write this blog post to share how I built the system; I hope you find it useful. We will use Consul, Docker, Docker compose, Nginx, Python and Supervisord to create a load-balancing system whose configuration is updated whenever the load-balanced group scales up or down.

Requirements

The only requirements on your machine are Docker and Docker compose. To install Docker, follow the official Docker installation guide. To install Docker compose you can use pip install docker-compose. Remember to use a virtual environment so you don’t pollute your global Python environment.

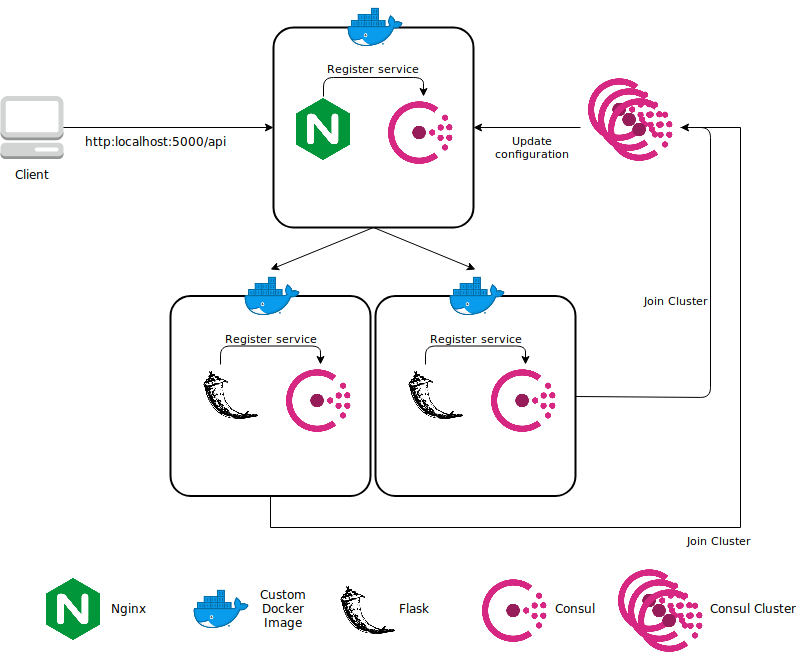

An overview

The system has at least five containers running:

- 1 Load balancer

- 1 API (two in the figure)

- 3 Consul Servers (forming a cluster)

Each container has its own Consul agent running, where the service is registered. The local agents then communicate with the Consul cluster. On every change to the API service group, the load balancer's configuration is updated, so it knows which containers to forward requests to.

Base image

Since all our Docker containers will be running common services, we can create a base image. As we will be running multiple services inside each container, we need a process manager. One option that Docker suggests in its documentation is Supervisord, so that is what we will use.

So our base image will contain:

- Supervisord: managing processes.

- Consul agent: will communicate with the Consul cluster.

- Register service: a “one-shot” process to register the service into the local Consul agent.

Base Dockerfile

# Dockerfile

FROM ubuntu:18.04

# Same exposed ports as Consul

EXPOSE 8301 8301/udp 8302 8302/udp 8500 8600 8600/udp 8300

# unzip and wget for consul installation, python3 for supervisord

RUN apt-get update && apt-get install unzip wget python3 python3-distutils -y

# Pip the PyPA way, setuptools and wheel (note the --no-cache-dir to prevent pollution)

RUN wget -q https://bootstrap.pypa.io/get-pip.py -O /tmp/get-pip.py && \
    python3 /tmp/get-pip.py pip setuptools wheel --no-cache-dir

# Supervisord from PyPI doesn't support Python 3, so I download the master branch from GitHub

RUN python3 -m pip install https://github.com/Supervisor/supervisor/archive/master.zip --no-cache-dir && \
    mkdir -p /var/log/supervisor /etc/supervisor/conf.d/

# Download consul and move it to the PATH

ENV CONSUL_URL https://releases.hashicorp.com/consul/1.2.2/consul_1.2.2_linux_amd64.zip

RUN wget -q $CONSUL_URL -O /tmp/consul.zip && unzip /tmp/consul.zip && mv /consul /bin/consul && \
    mkdir /etc/consul.d/

# Configuration for starting the consul process, for the one shot registering and for

# supervisord itself

COPY consul.conf /etc/supervisor/conf.d/consul.conf

COPY register.conf /etc/supervisor/conf.d/register.conf

COPY supervisord.conf /etc/supervisor/supervisord.conf

ENTRYPOINT ["supervisord", "-c", "/etc/supervisor/supervisord.conf"]

# Cleanup!

RUN apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

The Dockerfile:

#. expose some ports for Consul communications.

#. install unzip, python and wget so we can install and run things.

#. install packages for python: pip, setuptools and wheel.

#. install supervisord to manage processes.

#. install Consul.

#. add the configuration files for supervisord.

#. set the entrypoint to use supervisord.

#. clean the package lists and the temporary directories.

Supervisord configuration

# supervisord.conf

[unix_http_server]

file = /tmp/supervisor.sock ; the path to the socket file

[supervisord]

logfile = /var/log/supervisor/supervisord.log

logfile_maxbytes = 50MB ; max main logfile bytes b4 rotation; default 50MB

logfile_backups = 10 ; # of main logfile backups; 0 means none, default 10

loglevel = info ; log level; default info; others: debug,warn,trace

pidfile = /var/run/supervisord.pid

nodaemon = true

minfds = 1024 ; min. avail startup file descriptors; default 1024

minprocs = 200 ; min. avail process descriptors;default 200

directory = /tmp

[rpcinterface:supervisor]

supervisor.rpcinterface_factory = supervisor.rpcinterface:make_main_rpcinterface

[supervisorctl]

serverurl = unix:///tmp/supervisor.sock ; use a unix:// URL for a unix socket

[include]

files = /etc/supervisor/conf.d/*.conf

There are two things worth noting in this file: nodaemon = true, which makes Supervisord run in the foreground, and the [include] section, which loads the files inside /etc/supervisor/conf.d/.

Consul + Supervisord

# consul.conf

[program:consul]

command = consul agent -retry-join=%(ENV_CONSUL_SERVER)s -data-dir /tmp/consul -config-dir /etc/consul.d

stdout_capture_maxbytes = 1MB

redirect_stderr = true

stdout_logfile = /var/log/supervisor/%(program_name)s.log

We run the Consul agent which will try to connect to the defined CONSUL_SERVER. Also, we set the data and configuration directories. Finally we redirect the stdout and stderr to the file /var/log/supervisor/consul.log.
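To make the %(ENV_CONSUL_SERVER)s placeholder less mysterious, here is a rough sketch of how such an expansion behaves. It relies on Python's own %-style named formatting, which has the same syntax; the expand helper is hypothetical and is not Supervisord's actual code.

```python
command = (
    "consul agent -retry-join=%(ENV_CONSUL_SERVER)s "
    "-data-dir /tmp/consul -config-dir /etc/consul.d"
)


def expand(template, environ):
    """Expand %(ENV_NAME)s placeholders from an environment mapping.

    Hypothetical helper: it only imitates the placeholder syntax used in
    consul.conf, it is not taken from Supervisord.
    """
    expansions = {f"ENV_{key}": value for key, value in environ.items()}
    return template % expansions


print(expand(command, {"CONSUL_SERVER": "server-1"}))
```

In this sketch a missing CONSUL_SERVER raises a KeyError, which gives an idea of why the variable always has to be defined when starting a container from this image.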

Register + Supervisord

# register.conf

[program:register]

command = register

startsecs = 0

autorestart = false

startretries = 1

redirect_stderr = true

stdout_logfile = /var/log/supervisor/%(program_name)s.log

This program is responsible for registering the service running in the container with the local Consul agent. It expects an executable called register in the PATH. As it runs only once, the executable itself should take care of retrying the registration.

Building the image

We put all these files into a folder (mine is called base-image) and run the following inside it:

$ docker build . -t base-image:latest

This will create the Docker image so you can use it in other images or start a container from it.

The API

With the base image built, we can build the API on top of it. I’ve created the API using Python and the Flask framework, but you can of course implement it in any language you like.

API with Flask

# app.py
import socket

from flask import Flask
from flask import jsonify

app = Flask(__name__)


@app.route("/hostname")
def hostname():
    return jsonify({"hostname": socket.gethostname()})


if __name__ == '__main__':
    app.run()

The API consists of a single endpoint at /hostname which returns the hostname of the Docker container, so we can tell whether the load balancer is working correctly.

Register script

# register.py
#! /usr/bin/env python3
import time

import requests

consul_register_endpoint = "http://localhost:8500/v1/agent/service/register"

template = {
    "name": "api",
    "tags": ["flask"],
    "address": "",
    # Flask's default port, the same one used by the health check below
    "port": 5000,
    "checks": [
        {"http": "http://localhost:5000/hostname", "interval": "5s"}
    ]
}

for retry in range(10):
    res = requests.put(consul_register_endpoint, json=template)
    print("Attempt num:", retry, "Response Status:", res.status_code)
    if res.status_code == 200:
        print("Registering successful!")
        break
    else:
        print(res.text)
    time.sleep(1)
else:
    # Runs only when the loop finished without hitting break
    print("Ran out of retries. So something went wrong.")

I’ve implemented the register executable using Python. It will try to register the service with the local Consul agent up to 10 times and then give up. There is nothing magic about it: a simple PUT request to Consul's /v1/agent/service/register endpoint, passing the correct payload. Here, we tell Consul to check the health of our service by contacting the /hostname endpoint, but you can create another endpoint for the health check.
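As a sketch of the dedicated health-check idea, the helper below builds a payload in the same shape as the one in register.py, but points the check at a separate path. Both build_registration and the /health path are assumptions made for illustration: the API in this post only exposes /hostname, so you would have to add that route yourself.

```python
import json


def build_registration(name, port, health_path="/health", tags=None, interval="5s"):
    """Build a registration payload shaped like the one in register.py.

    health_path is a hypothetical endpoint; the API in this post only
    defines /hostname, so a /health route would need to be added to it.
    """
    return {
        "name": name,
        "tags": list(tags or []),
        "address": "",
        "port": port,
        "checks": [
            {"http": f"http://localhost:{port}{health_path}", "interval": interval}
        ],
    }


payload = build_registration("api", 5000, tags=["flask"])
print(json.dumps(payload, indent=2))
```

Keeping the health check on its own route lets you change what "healthy" means (for example, checking a database connection) without touching the endpoints that clients call.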

Flask + Supervisord

# flask.conf

[program:flask]

command = flask run --host=0.0.0.0

directory = /app/

stdout_capture_maxbytes = 1MB

redirect_stderr = true

stdout_logfile = /var/log/supervisor/%(program_name)s.log

We set the service to start in the /app/ directory, where we will copy the files of our API. We start the API using flask run --host=0.0.0.0, which requires the FLASK_APP environment variable to be set; we will do so in the Dockerfile.

API Dockerfile

# Dockerfile

FROM base-image:latest

ENV PYTHONUNBUFFERED=1 LC_ALL=C.UTF-8 LANG=C.UTF-8 FLASK_APP=app.py

RUN mkdir /app

WORKDIR /app

RUN python3 -m pip install Flask requests -U

ADD . /app/

COPY flask.conf /etc/supervisor/conf.d/flask.conf

COPY register.py /bin/register

RUN chmod +x /bin/register

Since most of the functionality lives in the base image, here we only define a few environment variables, install some dependencies, and copy our API, the register script and the service configuration.

Running and testing the API

Now we have all the files to build our API image and run it:

$ docker build . -t api:latest

# We should define the CONSUL_SERVER, so it won't crash

$ docker run -d --rm -e CONSUL_SERVER=localhost --name api -p 5000:5000 api:latest

$ curl localhost:5000/hostname

{"hostname":"f9e373e68d2e"}

Another thing done! Let’s continue :)

The Load Balancer

Load balancer Dockerfile

# Dockerfile

FROM base-image:latest

# APT tasks

RUN apt-get update && apt-get install gnupg -y

# Install nginx

COPY nginx.list /etc/apt/sources.list.d/nginx.list

RUN apt-key adv --keyserver keyserver.ubuntu.com --recv-keys ABF5BD827BD9BF62

RUN apt-get install nginx -y

# Consul template

RUN wget -q https://releases.hashicorp.com/consul-template/0.19.5/consul-template_0.19.5_linux_amd64.zip -O consul-template.zip && \
    unzip consul-template.zip && mv consul-template /bin/consul-template && rm consul-template.zip

RUN mkdir -p /etc/consul-templates/

COPY load-balancer.tpl /etc/consul-templates/load-balancer.tpl

# Our application

RUN python3 -m pip install requests --no-cache-dir

RUN useradd nginx

COPY nginx.conf /etc/nginx/nginx.conf

COPY default.conf /etc/nginx/conf.d/default.conf

# Supervisor files

COPY consul-template.conf /etc/supervisor/conf.d/consul-template.conf

COPY supervisor-nginx.conf /etc/supervisor/conf.d/nginx.conf

COPY register.py /bin/register

# Apt cleanup

RUN apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

Apart from Nginx, the load balancer also needs Consul template. Since we are installing Nginx from the official Nginx repositories, we need to add them to the sources list and import their signing key. We also copy the register script and the configuration files for Nginx.

Consul template + Supervisord

# consul-template.conf

[program:consul-template]

priority = 1

command = consul-template -template "/etc/consul-templates/load-balancer.tpl:/etc/nginx/conf.d/load-balancer.conf:supervisorctl restart nginx"

stdout_capture_maxbytes = 1MB

redirect_stderr = true

stdout_logfile = /var/log/supervisor/%(program_name)s.log

The ‘magic’ happens here: we run consul-template which will render the template into the nginx configuration directory and run supervisorctl to restart the Nginx service, so the configuration is reloaded.

Load balancer template

# load-balancer.tpl
upstream backend {
    least_conn;
    {{ range service "api|passing" }}
    server {{ .Address }}:{{ .Port }};{{ end }}
}

server {
    listen 5000;
    server_name localhost;

    location / {
        proxy_pass http://backend;
    }
}

In the template, we get the address and port of every service registered under the ‘api’ name. We filter them by ‘passing’ status, so no unhealthy API is added to the load balancing.
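To picture what the rendering step produces, here is a small Python sketch that builds the same upstream block from a list of healthy instances. It only imitates the result of the template; render_upstream is a hypothetical helper and the addresses are hard-coded stand-ins for what Consul would return.

```python
def render_upstream(instances, name="backend"):
    """Render an Nginx upstream block from (address, port) pairs.

    `instances` stands in for the passing services that consul-template
    would read from Consul; here it is simply a hard-coded list.
    """
    lines = [f"upstream {name} {{", "    least_conn;"]
    for address, port in instances:
        lines.append(f"    server {address}:{port};")
    lines.append("}")
    return "\n".join(lines)


print(render_upstream([("172.19.0.3", 5000), ("172.19.0.8", 5000)]))
```

One thing this makes visible: with no passing instances the block contains no server lines at all, and Nginx refuses an upstream without servers, so it may be worth thinking about a fallback for that case.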

Nginx + Supervisord

# supervisor-nginx.conf

[program:nginx]

command = nginx

autostart = true

autorestart = unexpected

exitcodes = 0

redirect_stderr = true

stdout_logfile = /var/log/supervisor/%(program_name)s.log

Start Nginx!

Nginx configuration files

# default.conf
server {
    listen 80;
    server_name localhost;

    location / {
        root /usr/share/nginx/html;
        index index.html index.htm;
    }

    error_page 500 502 503 504 /50x.html;

    location = /50x.html {
        root /usr/share/nginx/html;
    }
}

The default file, which serves the Nginx welcome page on port 80.

# nginx.conf
user nginx;
worker_processes 1;
daemon off;

error_log /dev/stdout warn;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /dev/stdout main;

    sendfile on;
    keepalive_timeout 65;

    include /etc/nginx/conf.d/*.conf;
}

The main configuration file of Nginx. Note the daemon off directive and the include of /etc/nginx/conf.d/*.conf. The former prevents Nginx from going to the background, and the latter includes the files placed in /etc/nginx/conf.d/, where consul-template renders the template. Also, we send both the access log and the errors to /dev/stdout so Supervisord gets the logs.

# nginx.list

deb http://nginx.org/packages/ubuntu/ bionic nginx

deb-src http://nginx.org/packages/ubuntu/ bionic nginx

The repositories…

Register executable

# register.py
#! /usr/bin/env python3
import requests
import time

consul_register_endpoint = "http://localhost:8500/v1/agent/service/register"

template = {
    "name": "nginx",
    "tags": ["nginx"],
    "address": "",
    "port": 80,
    "checks": [
        {
            "http": "http://localhost:80",
            "interval": "5s"
        }
    ]
}

for retry in range(10):
    res = requests.put(consul_register_endpoint, json=template)
    print("Attempt num:", retry, "Response Status:", res.status_code)
    if res.status_code == 200:
        print("Registering successful!")
        break
    else:
        print(res.text)
    time.sleep(1)
else:
    # Runs only when the loop finished without hitting break
    print("Ran out of retries. So something went wrong.")

In this case, we use port 80 to check the health, while the actual requests are sent to port 5000.

Docker compose

The final piece of the puzzle: the docker-compose.yml file, which contains all our architecture.

# docker-compose.yml
version: '3.7'

services:
  server-1:
    image: consul
    command: consul agent -server -bootstrap-expect=3 -data-dir /tmp/consul -node=server-1

  server-2:
    image: consul
    command: consul agent -server -bootstrap-expect=3 -data-dir /tmp/consul -retry-join=server-1 -node=server-2

  server-3:
    image: consul
    command: consul agent -server -bootstrap-expect=3 -data-dir /tmp/consul -retry-join=server-1 -node=server-3

  consul-ui:
    image: consul
    command: consul agent -data-dir /tmp/consul -retry-join=server-1 -client 0.0.0.0 -ui -node=client-ui
    ports:
      - 8500:8500

  api:
    image: api:latest
    environment:
      - CONSUL_SERVER=server-1

  load-balancer:
    image: load-balancer:latest
    environment:
      - CONSUL_SERVER=server-1
    ports:
      - 5000:5000

The docker-compose file defines a series of services:

#. 3 Consul servers, with server-2 and server-3 joining the container named server-1 (there could be fewer).

#. 1 Consul agent serving the UI, exposing its port 8500 to the local machine.

#. The API with the CONSUL_SERVER defined as server-1.

#. The Load Balancer with the CONSUL_SERVER defined as server-1 and port 5000 open to the local machine.

Running the whole system

Now it’s time to run docker-compose!

$ (cd base-image && docker build . -t base-image:latest)

$ (cd api && docker build . -t api:latest)

$ (cd load-balancer && docker build . -t load-balancer:latest)

$ docker-compose up -d

$ firefox http://localhost:8500

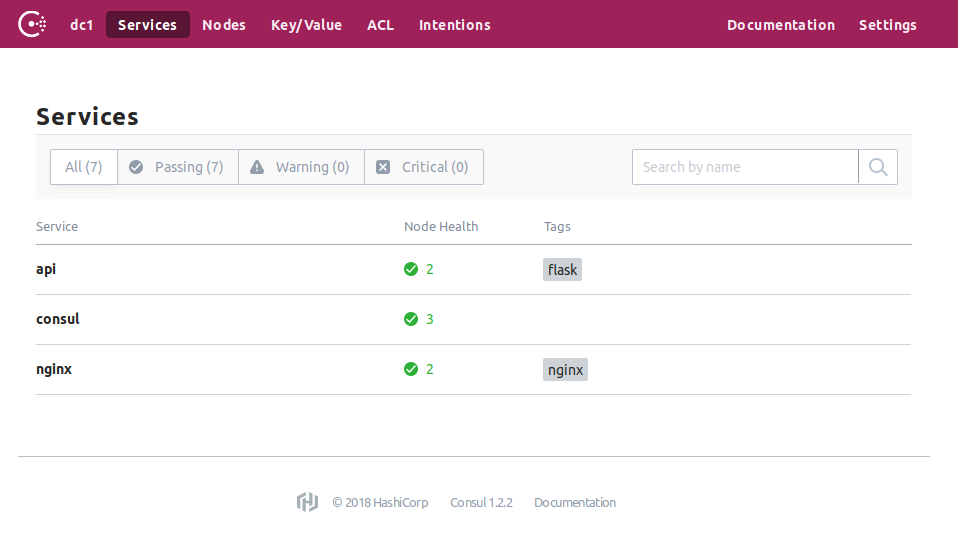

Probably you will see the Consul UI with an error. Be patient and refresh, you should see something like this:

If you are not seeing this, check the logs with docker-compose logs [load-balancer|api], which will output the Supervisord log. For see each service log run docker-compose exec [load-balancer|api] supervisorctl and play with the Supervisord interactive console.

If all is running correctly, you should see this working.

$ curl http://localhost:5000/hostname

{"hostname":"XXXXXXXXXX"}

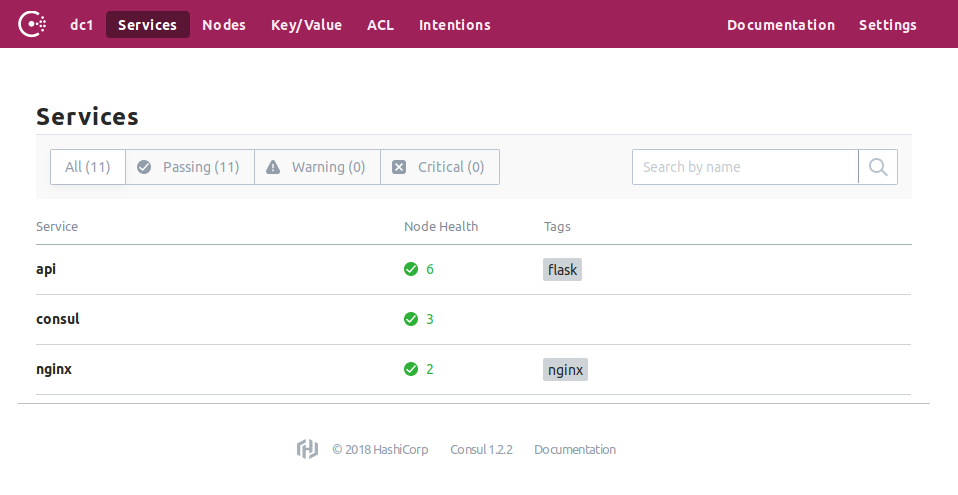

Scaling the API

The next step is to scale our API and check if the load-balancer gets updated. We can check the Nginx configuration file before scaling.

$ docker-compose exec load-balancer cat /etc/nginx/conf.d/load-balancer.conf

upstream backend {

least_conn;

server 172.19.0.3:5000;

}

server {

listen 5000;

server_name localhost;

location / {

proxy_pass http://backend;

}

}

Now, we run:

$ docker-compose up -d --scale api=3

And two more API containers will be created, for a total of three.

$ docker-compose exec load-balancer cat /etc/nginx/conf.d/load-balancer.conf

upstream backend {

least_conn;

server 172.19.0.9:5000;

server 172.19.0.8:5000;

server 172.19.0.3:5000;

}

[...]

Great! The configuration file is now updated :)
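If you prefer checking this from a script rather than reading the Nginx file, Consul's health endpoint (/v1/health/service/<name>?passing) returns the same list of passing instances. The sketch below assumes the UI agent from the docker-compose file is reachable on localhost:8500; the function names are hypothetical and the sample data is hand-written, trimmed to the fields the code reads.

```python
import json
from urllib.request import urlopen


def healthy_instances(entries):
    """Extract (address, port) pairs from a /v1/health/service response.

    Falls back to the node address when the service address is empty,
    which is the case here because register.py sends "address": "".
    """
    result = []
    for entry in entries:
        service = entry["Service"]
        address = service.get("Address") or entry["Node"]["Address"]
        result.append((address, service["Port"]))
    return result


def fetch_healthy(service="api", consul="http://localhost:8500"):
    """Ask Consul for the passing instances of `service` (needs the stack running)."""
    with urlopen(f"{consul}/v1/health/service/{service}?passing") as response:
        return healthy_instances(json.load(response))


# Hand-written sample in the shape Consul returns, not real output.
sample = [
    {"Node": {"Address": "172.19.0.3"}, "Service": {"Address": "", "Port": 5000}},
    {"Node": {"Address": "172.19.0.8"}, "Service": {"Address": "", "Port": 5000}},
]
print(healthy_instances(sample))
```

With the containers up, calling fetch_healthy() should list one pair per API container that is currently passing its check.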

The number of APIs in the Consul UI has also increased. And if we send a bunch of requests to localhost:5000…

$ curl http://localhost:5000/hostname

{"hostname":"6215fd5a5651"}

$ curl http://localhost:5000/hostname

{"hostname":"16f52ff4f50e"}

$ curl http://localhost:5000/hostname

{"hostname":"ef97072c7cca"}

It works :)
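Running curl by hand gets tedious quickly, so here is a small sketch that counts how many responses come from each container. The function names are hypothetical, and the offline example reuses the three responses shown above plus one repeated entry added for illustration.

```python
import json
from collections import Counter
from urllib.request import urlopen


def tally_hostnames(bodies):
    """Count how many JSON responses came from each hostname."""
    return Counter(json.loads(body)["hostname"] for body in bodies)


def sample_load_balancer(url="http://localhost:5000/hostname", requests_count=30):
    """Send several requests to the load balancer (needs the stack running)."""
    bodies = []
    for _ in range(requests_count):
        with urlopen(url) as response:
            bodies.append(response.read().decode("utf-8"))
    return tally_hostnames(bodies)


# Offline example: the three responses from above, with the first one repeated.
bodies = [
    '{"hostname":"6215fd5a5651"}',
    '{"hostname":"16f52ff4f50e"}',
    '{"hostname":"ef97072c7cca"}',
    '{"hostname":"6215fd5a5651"}',
]
counts = tally_hostnames(bodies)
print(counts)
```

Against the running system, sample_load_balancer() should report as many distinct hostnames as there are healthy API containers.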

Scaling down the API

Now imagine that the service is getting fewer requests, so it auto-scales down the number of API containers. We will simulate this using Docker compose:

$ docker-compose up -d --scale api=2

One API container will be stopped and removed, the configuration file will be updated, and the curls will return only two hostnames.

$ curl http://localhost:5000/hostname

{"hostname":"1c3b38502212"}

$ curl http://localhost:5000/hostname

{"hostname":"ef97072c7cca"}

Summary

We created a system with a load balancer that updates itself when the load-balanced group changes, using Consul, Consul template and Nginx. In my case, I built the API with Python and Flask, but experiment and build your own test API with other frameworks! We ran everything in Docker containers, orchestrated by Docker compose. You can check out the repository at

I hope you learned something new! If not, leave a comment and ask for content!

Was it useful? Have you done something similar? Do you have feedback?